Aurich Lawson | Getty Images

AI assistants have been widely available for a little more than a year, and they already have access to our most private thoughts and business secrets. People ask them about becoming pregnant or terminating or preventing pregnancy, consult them when considering a divorce, seek information about drug addiction, or ask for edits in emails containing proprietary trade secrets. The providers of these AI-powered chat services are keenly aware of the sensitivity of these discussions and take active steps, mainly in the form of encrypting them, to prevent potential snoops from reading other people's interactions.

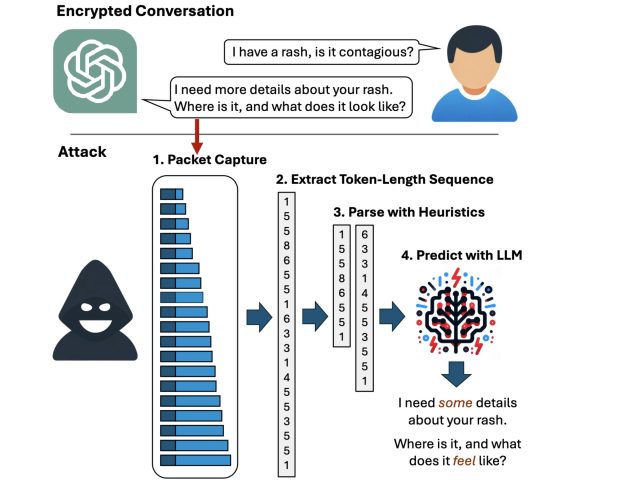

But now, researchers have devised an attack that deciphers AI assistant responses with surprising accuracy. The technique exploits a side channel present in all of the major AI assistants, with the exception of Google Gemini. It then refines the fairly raw results through large language models specially trained for the task. The result: Someone with a passive adversary-in-the-middle position (meaning an adversary who can monitor the data packets passing between an AI assistant and the user) can infer the specific topic of 55 percent of all captured responses, usually with high word accuracy. The attack can deduce responses with perfect word accuracy 29 percent of the time.


Token privacy

"Currently, anybody can read private chats sent from ChatGPT and other services," Yisroel Mirsky, head of the Offensive AI Research Lab at Ben-Gurion University in Israel, wrote in an email. "This includes malicious actors on the same Wi-Fi or LAN as a client (e.g., same coffee shop), or even a malicious actor on the Internet: anyone who can observe the traffic. The attack is passive and can happen without OpenAI or their client's knowledge. OpenAI encrypts their traffic to prevent these kinds of eavesdropping attacks, but our research shows that the way OpenAI is using encryption is flawed, and thus the content of the messages are exposed."

Mirsky was referring to OpenAI, but with the exception of Google Gemini, all other major chatbots are also affected. As an example, the attack can infer the encrypted ChatGPT response:

- Yes, there are several important legal considerations that couples should be aware of when considering a divorce, …

as:

- Yes, there are several potential legal considerations that someone should be aware of when considering a divorce. …

and the Microsoft Copilot encrypted response:

- Here are some of the latest research findings on effective teaching methods for students with learning disabilities: …

is inferred as:

- Here are some of the latest research findings on cognitive behavior therapy for children with learning disabilities: …

While the underlined words show that the precise wording isn't perfect, the meaning of the inferred sentence is highly accurate.
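To get a feel for why a length sequence alone says so much about a sentence, here is a toy model of the inference step. It is not the researchers' method: as hypothetical simplifications, whitespace-separated words stand in for real LLM tokens, and a hand-written candidate list stands in for the specially trained language model that proposes plausible text. The eavesdropper is assumed to learn only the length of each token, and candidates are ranked by how closely their length sequences fit.

```python
# Toy model of the inference step; NOT the researchers' method.
# Hypothetical simplifications: whitespace-separated words stand in for
# real LLM tokens, and a hand-written candidate list stands in for the
# specially trained language model that proposes plausible text.

def token_lengths(text: str) -> list[int]:
    """Length of each whitespace-separated 'token' in text."""
    return [len(tok) for tok in text.split()]

def length_distance(observed: list[int], candidate: str) -> int:
    """How badly a candidate's length sequence fits the observed one:
    per-position absolute differences, plus the full length of any
    token left over when the two sequences differ in length."""
    cand = token_lengths(candidate)
    shared = sum(abs(a - b) for a, b in zip(observed, cand))
    leftover = observed[len(cand):] + cand[len(observed):]
    return shared + sum(leftover)

def rank_candidates(observed: list[int], candidates: list[str]) -> list[str]:
    """Best-fitting candidates first (ties keep their original order)."""
    return sorted(candidates, key=lambda c: length_distance(observed, c))

# The eavesdropper never sees this text, only its length sequence.
secret = "there are several important legal considerations"
observed = token_lengths(secret)  # [5, 3, 7, 9, 5, 14]

candidates = [
    "there are several potential legal considerations",
    "here is a short recipe for bread",
    "there are several important legal considerations",
]
for c in rank_candidates(observed, candidates):
    print(length_distance(observed, c), c)
```

In this toy, "important" and "potential" both have nine letters, so the length sequence cannot separate the first and third candidates at all, while the unrelated sentence scores far worse. Breaking such ties requires a model of which wording is more likely, which is roughly the role the article describes for the specially trained LLMs.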

Weiss et al.

The following video demonstrates the attack in action against Microsoft Copilot:

Token-length sequence side-channel attack on Bing.

A side channel is a means of obtaining secret information from a system through indirect or unintended sources, such as physical manifestations or behavioral characteristics: the power consumed, the time required, or the sound, light, or electromagnetic radiation produced during a given operation. By carefully monitoring these sources, attackers can assemble enough information to recover encrypted keystrokes or encryption keys from CPUs, browser cookies from HTTPS traffic, or secrets from smartcards. The side channel used in this latest attack resides in the tokens that AI assistants use when responding to a user query.
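A classic timing side channel makes the definition concrete. The sketch below is a generic illustration, unrelated to AI assistants: a byte-by-byte comparison that stops at the first mismatch does more work the longer the correct prefix of a guess is, so the time it takes leaks information that the true/false result does not. To keep the example deterministic, the number of bytes examined stands in for measured running time; the secret value is made up.

```python
import hmac

def leaky_equals(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Compare byte by byte, stopping at the first mismatch.
    Returns (result, bytes_examined); bytes_examined is a proxy for the
    running time an attacker could measure from outside."""
    examined = 0
    for s, g in zip(secret, guess):
        examined += 1
        if s != g:
            return False, examined
    return len(secret) == len(guess), examined

secret = b"hunter2"  # made-up secret for illustration
for guess in (b"xxxxxxx", b"huxxxxx", b"huntexx"):
    ok, work = leaky_equals(secret, guess)
    # Every guess is rejected, but 'work' grows with the correct prefix.
    print(guess, ok, work)

# Standard-library alternative designed so that timing does not reveal
# where the first mismatch occurs.
print(hmac.compare_digest(secret, b"huntexx"))
```

All three guesses are rejected, yet the amount of work rises as more leading bytes match, letting an attacker recover the secret one byte at a time. The token-length leak works on the same principle: the encrypted content stays hidden, but an observable property of the transmission does not.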

Tokens are akin to words that are encoded so they can be understood by LLMs. To enhance the user experience, most AI assistants send tokens on the fly, as soon as they're generated, so that end users receive the responses continuously, word by word, rather than all at once much later, after the assistant has generated the entire answer. While the token delivery is encrypted, the real-time, token-by-token transmission exposes a previously unknown side channel, which the researchers call the "token-length sequence."
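A minimal sketch of how such a token-length sequence could be read off the wire, under loudly stated assumptions: each token travels in its own encrypted message, and encryption plus framing adds a constant number of bytes to every message. The `OVERHEAD` value, the `encrypt_stub` stand-in for a length-preserving cipher, and the token split are all hypothetical; real services use different framing and tokenizers.

```python
import os

# Hypothetical constant: bytes added to every streamed message by framing
# and encryption (e.g., an authentication tag). Real values differ per service.
OVERHEAD = 37

def encrypt_stub(plaintext: bytes) -> bytes:
    """Stand-in for a length-preserving cipher: random bytes of the size a
    real ciphertext would have. The content is unreadable; the size is not."""
    return os.urandom(len(plaintext) + OVERHEAD)

def stream_response(tokens: list[str]) -> list[bytes]:
    """Server side: encrypt and send each token as soon as it is generated."""
    return [encrypt_stub(tok.encode("utf-8")) for tok in tokens]

def token_length_sequence(captured: list[bytes]) -> list[int]:
    """Eavesdropper side: recover token lengths (in bytes) from ciphertext sizes alone."""
    return [len(packet) - OVERHEAD for packet in captured]

# Hypothetical tokenization of the start of the ChatGPT example response.
tokens = ["Yes", ",", " there", " are", " several"]
captured = stream_response(tokens)
print(token_length_sequence(captured))  # [3, 1, 6, 4, 8]
```

The sketch depends entirely on the one-to-one mapping between tokens and messages and on the fixed overhead. If several tokens shared a message, or every message were padded to a common size, subtracting a constant would no longer recover individual token lengths.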